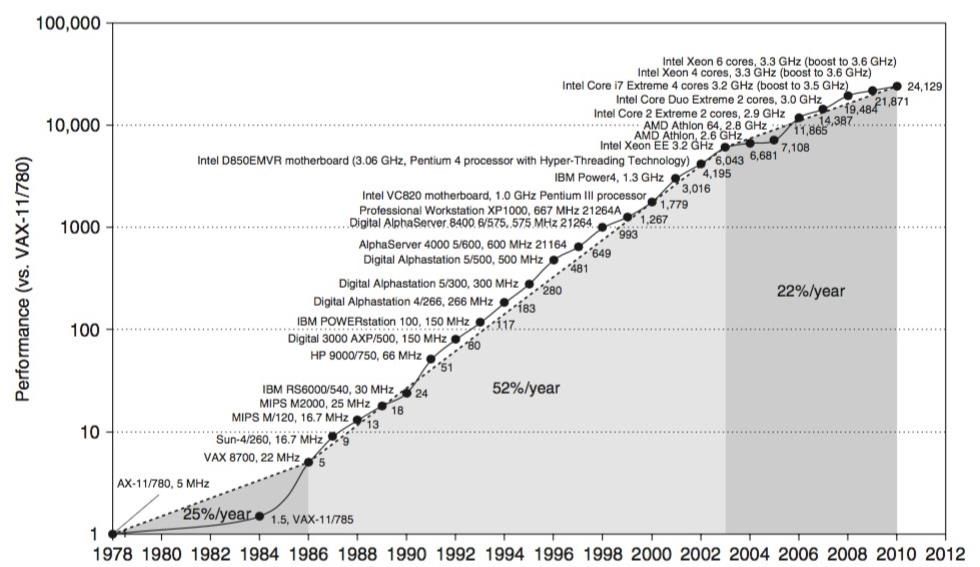

As many of you know, processor's clock frequency improvement got stuck in about 2003, causing the origin of multicore CPU (and other technologies). In this article I'll introduce you on how to run code simultaneously in various processors (I suppose that all of you have a multicore CPU).

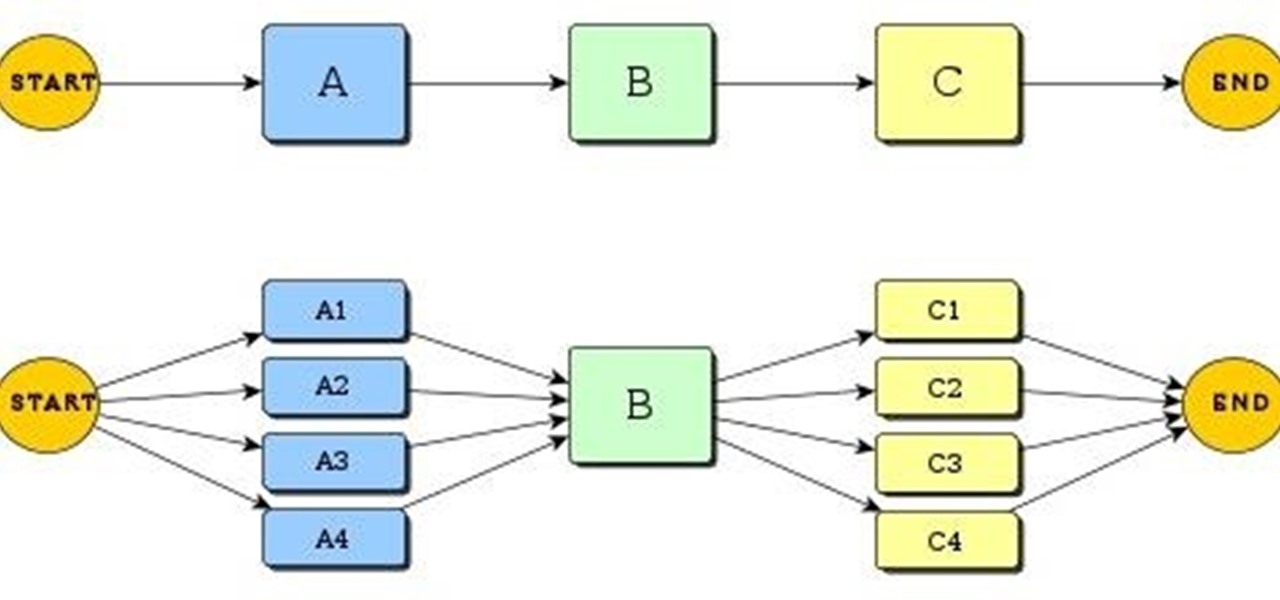

When you write code without any parallel directive, it only executes in one CPU at the same time (see it below). OpenMP make simple to work with various cores (if not with all of them) , without so much headache (forks, joins...), and let you speedup your programs linearly with the number of processors (this is not always possible).

I will be using Kali version 1 throughout this article, but I'm sure that anyone can adapt the information below to his own system (if you have any question, feel free to ask).

What Is OpenMP?

OpenMP is an Application Programming Interface to write parallel applications in shared memory systems. Provides mapping for FORTRAN, C and C++, in this article we will use it in a simple C program. A lot of tools, for example John the Ripper, use OpenMP in order to reduce execution time (critical in almost all software).

I will not cover in depth all the features of OpenMP (there's a lot of information in the official site and around Internet), but I will show how simple could be to enhance our programs.

Sequential Code

Create a new directory to not mix anything with your files:

mkdir openmp

cd openmp

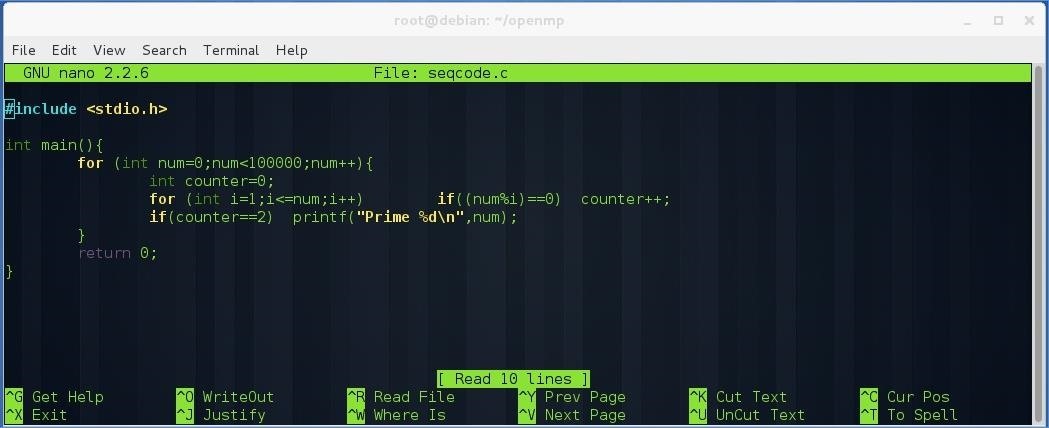

Let's write a simple program in C that gives us all prime numbers between 0 and 100000.

Open a text editor and place the above code. Save the file as seqcode.c, and compile it with the GNU compiler:

gcc -std=c99 -o seqcode seqcode.c

I have added the c99 option to declare the variables inside the loop.

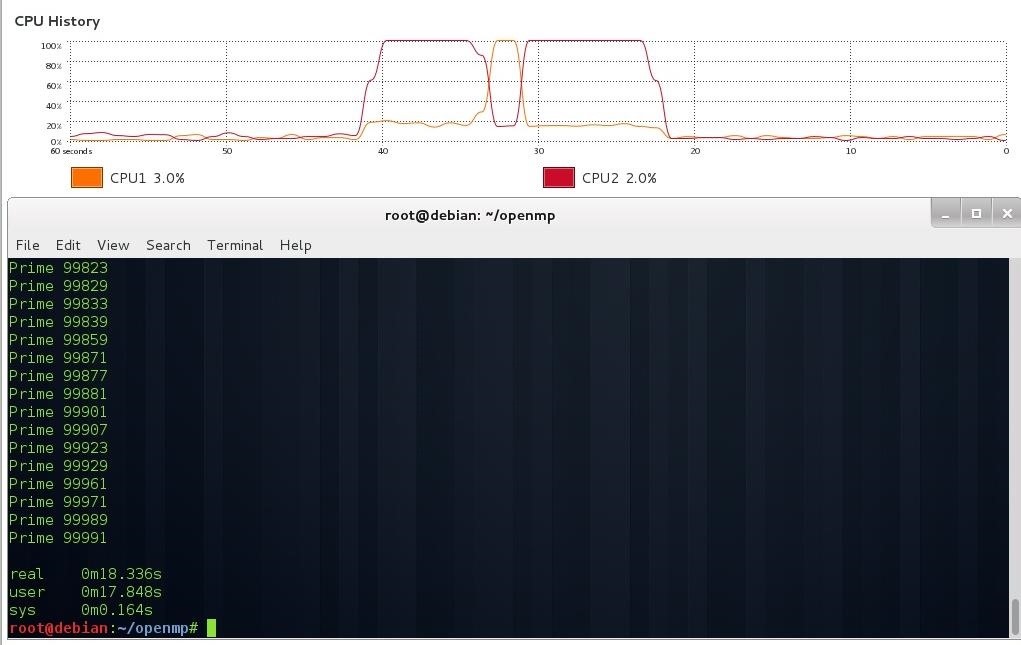

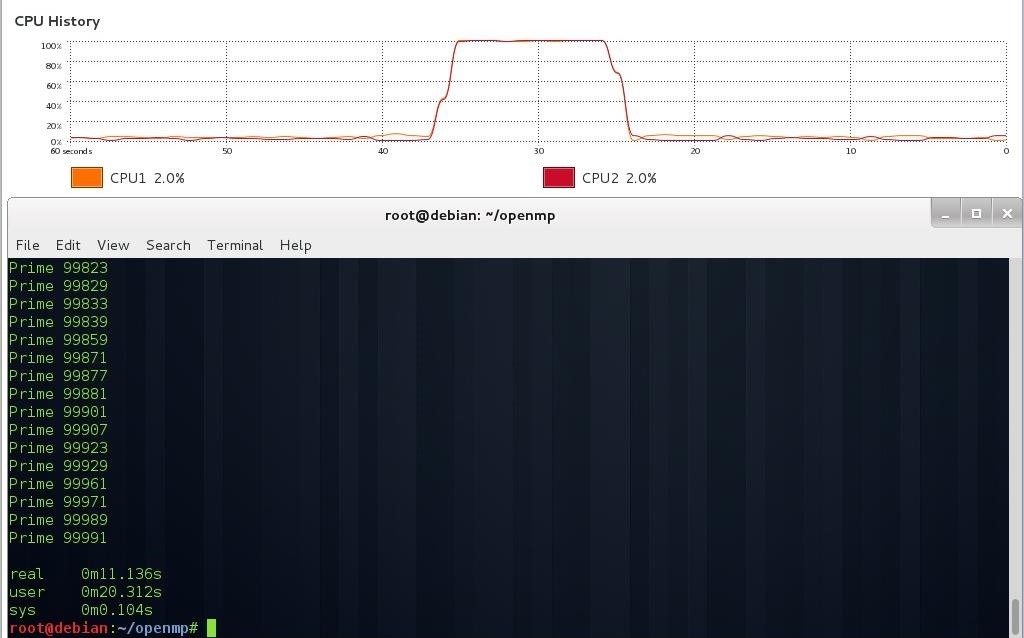

Open any system monitor (here I'm using the Kali's default system monitor) to see the CPU state while executing the program. Execute the sequential program:

time ./seqcode

As you see, the process runs in a single CPU at the same time. You can see that the process can change to another processor in execution time (this is managed by the kernel).

Parallel Code

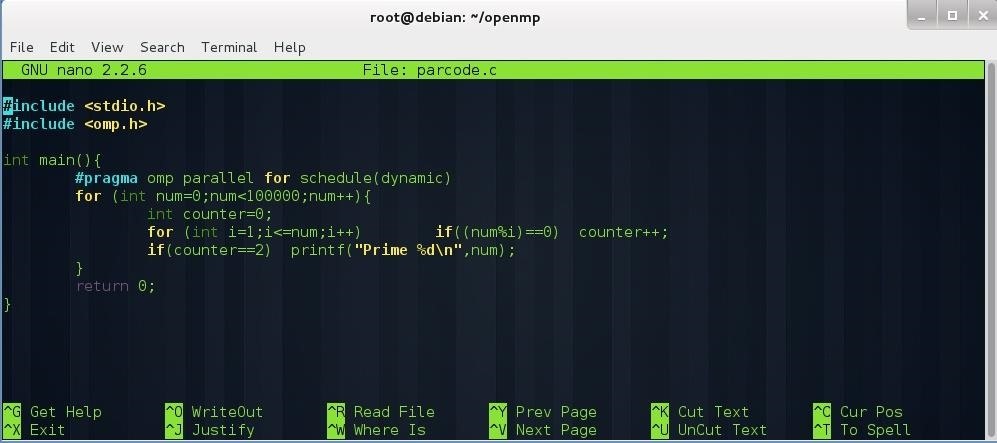

With our sequential program written, let's see how to convert it to a parallel program by adding a few lines to our code:

The basic OpenMP sintax (C/C++) is:

#pragma omp <directive> <clause/s>

First we include the OpenMP library (omp.h), next we add the next line before our loop:

#pragma omp parallel for schedule(dynamic)

Let's dissect the above line:

- #pragma omp is the default syntax for C and C++.

- parallel for is the directive. It means the next code is a for loop and can be executed in various threads.

- schedule(dynamic) this clause is used to assign iterations to each thread. I have chosen dynamic because if you select static with the default chunk size, each thread have to wait for the previous one to run, so it's a waste of time because you don't reduce the execution time.

Let's see how it works, compile the code (don't forget to add the openmp flag as below) and execute the parallel program:

gcc -std=c99 -fopenmp -o parcode parcode.c

time ./parcode

You can see the CPUs working at the same time.

Measuring the Improvement

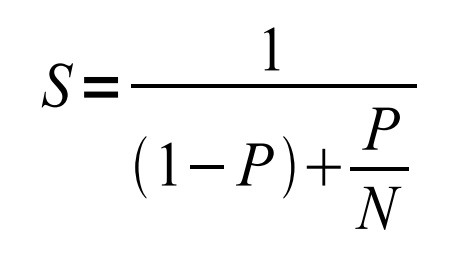

We have the time that each process has taken until finished (for that we have used the time command), so we are able to measure the speedup according to Amdahl's law (assuming that the program is fully parallelizable, so the improved portion is 100%):

Speedup of improvement = Time old / Time new

In our case we have:

Speedup of improvement = 18,336 s / 11, 136 s = 1,65

The overall speedup is given as:

- S -> Global speedup

- P -> Parallelizable part (we assume that is 100% so P=1)

- N -> Speedup of improvement (calculated before)

We have assumed that the parallelizable part is 100% so the global speedup is exactly the same as the speedup of the improvement.

This means that our program is 65% faster than the original in this machine (2 CPUs). It was a simple simulation, I have done it in a virtual machine so the time is not real. If you run the program on another machine, the speedup must vary according to the number of processors and the clock frequency of each processor.

In our case the code has been so simple to parallelize, but sometimes this is not possible due to critical variables, shared memory regions...

I hope you found this interesting! In the next article we will build a parallel password cracker with OpenMP, so keep coming!

References

Computer Architecture, 5th edition by Hennesy and Patterson.

Just updated your iPhone? You'll find new emoji, enhanced security, podcast transcripts, Apple Cash virtual numbers, and other useful features. There are even new additions hidden within Safari. Find out what's new and changed on your iPhone with the iOS 17.4 update.

5 Comments

nice job bro..

Hacked by Mr_Nakup3nda

Thanks!

I browsed through the OpenMP FAQ and it seems to be an API that does threading for you (you can correct me if I'm wrong). I've done a bit of simple threading in java and python but not in C. Cool article.

You are right. It's used to simplify parallelization, but you can do the same using pthread, or fork/join. It's more efficient if you do it in that way but takes more time.

Thanks for the article.

If you guys are interested in OpenMP and paralell progamming, let me recommend a free online course.

High Performance Scientific Computing at Coursera.org.

link https://www.coursera.org/course/scicomp

Moreover, there are many useful courses at coursera. Machine Learning and some Python course are really cool and understandable with good teachers.

Share Your Thoughts