Hey there, reader! In this tutorial we are going to explain how values are stored in variables as either signed or unsigned. Try to not get lost!

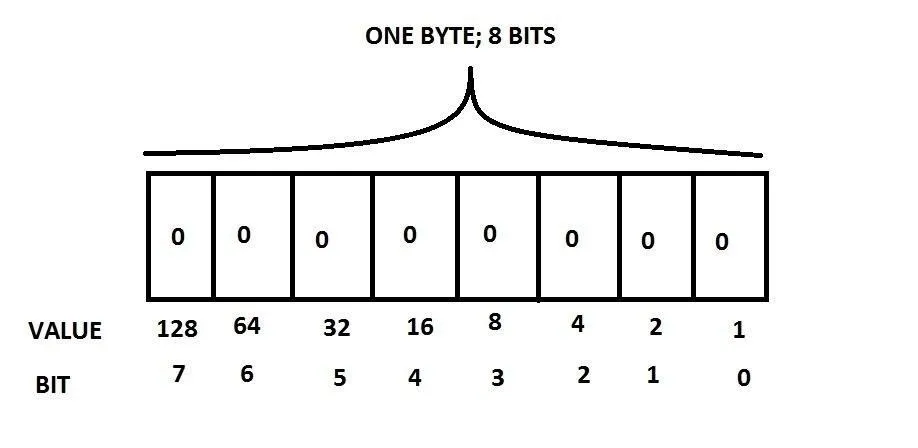

Everything in the computing world is made up of bits, much like atoms are the basic building blocks of everyday materials such as your computer or your house. How these bits are arranged gives you a certain value. Let's take a 1-byte value as an example. Each byte is made up of 8 bits, so here is what 1 byte looks like:

Looking at my poorly drawn image in Paint, we can see that one byte has 8 bits, numbered 0 to 7. Why start at 0, you say? Because in C (and in most things in computing), counting starts at 0, and you will see this later on. Currently, the value is 0 because every bit is 0. The "Value" row is there to help calculate the value of the byte. Each bit can only be 1 or 0, so if a bit is 1, it contributes the corresponding value in the "Value" row.

Note: If you are having trouble imagining this, try picturing one of those mechanical counters, except with only 1s and 0s.

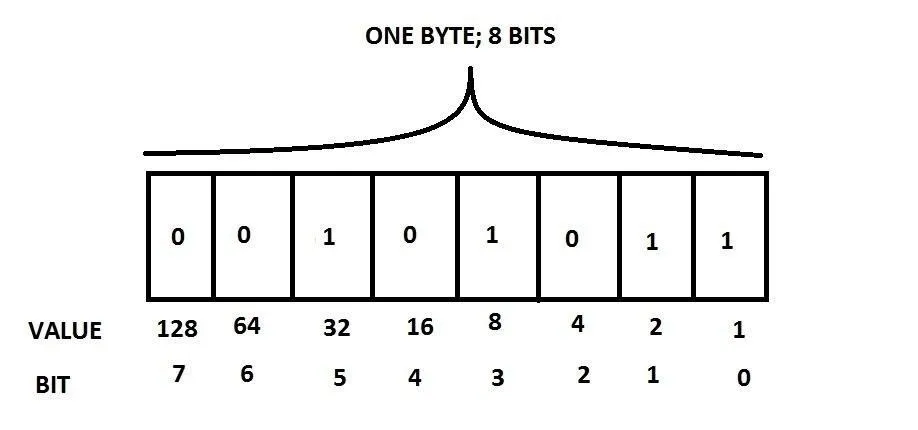

Let's do a simple example:

What is the value of this byte? First of all, we find each bit that has a 1 in it. In this one, there are 1s in the 0th, 1st, 3rd and 5th bits. Once we know which bits are 1, all we need to do is add up their values from the "Value" row, i.e. 32 + 8 + 2 + 1 = 43. So the value of this byte is 43. Easy. Here's a question: What is the maximum number a byte can hold?

But where do the values in the "Value" row come from, you ask? Looking at the "Bit" row, all we need to do to calculate the value of each bit is raise 2 to the power of the bit number, i.e. 2^0 = 1, 2^1 = 2, 2^7 = 128 and so on.

The reason why the maximum value of a signed data type is about half that of its unsigned counterpart is that in the signed world, the highest bit (in this case, bit 7) is used to represent the sign (+/-). If the highest bit is 1, the value is negative. In the unsigned world, the highest bit isn't treated as a sign, which frees it up to hold a value of its own. That value (128 for a byte) is exactly all the other bits summed together (127) plus one, and voila!

Example Code

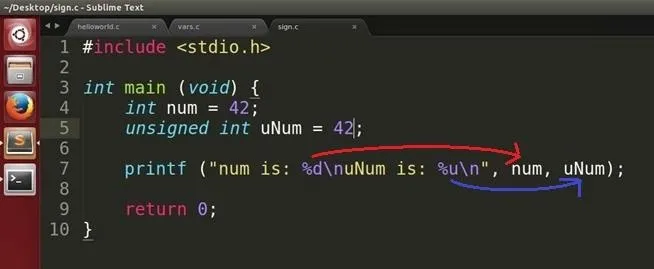

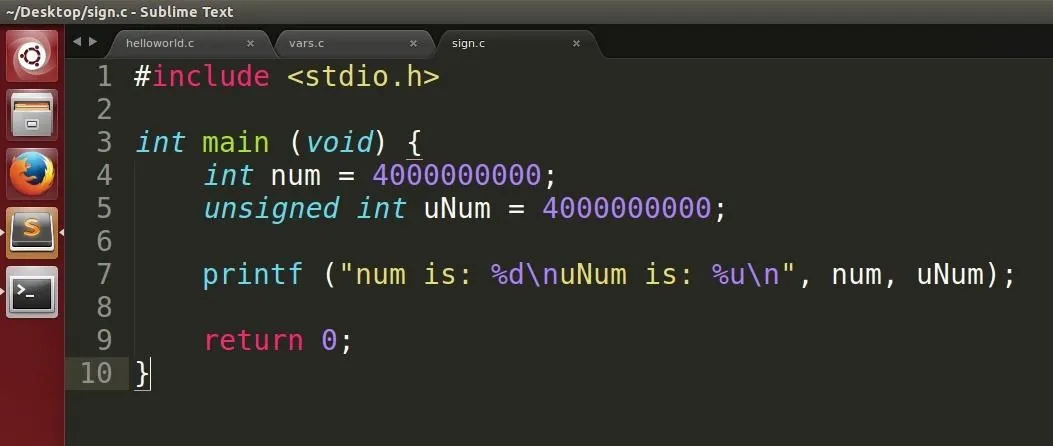

Let's see this theory in some code.

First we declare "num" as an int with the value 42, and you should know by now that this means num is signed by default. We also declare "uNum" with the value 42, but this time we specify that it is an unsigned int. Is there any difference between the two? Let's compile and run!

Note: %u is used to specify an unsigned format.

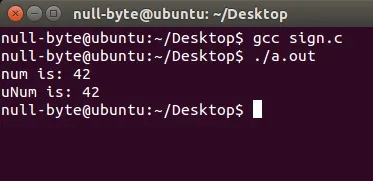

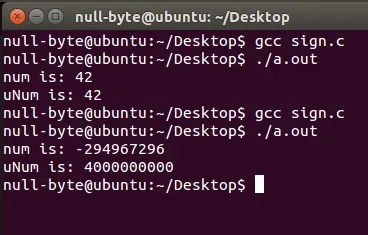

Compiling and Running

Looks like they are the same, no difference at all. This time, we will change the values of the two and see what happens...

Example Code

The variables now hold the value of 4,000,000,000. What do you think will happen?

Compiling and Running

Oops! Looks like something went wrong! num is now some seemingly random negative value, but I didn't give it this value?! What happened and why? If you guessed that the signed variable didn't have the capacity to hold such a value, then you are right. As I mentioned in the last tutorial, the maximum positive value a 32-bit signed integer can hold is 2^31-1, which is 2,147,483,647. The strange behavior we've just experienced is called an integer overflow, meaning that the value was too great to be held in the variable and therefore "wrapped" itself back around into the negative values.

[!] Security Consequences of Integer Overflows [!]

Okay, let's switch from programmer mode to hacker mode for a second. A problem with integer overflows is that numbers are used for things such as counting. Say a part of a program relies on an integer variable to count something, such as the current number of iterations in a loop (we will get into loops later). If that variable overflows, it can cause some serious damage to the program, because the loop may never reach its end condition. That means infinite looping and therefore, potentially, denial of service.

Another problem with integer overflows concerns buffers: an overflow can lead to a vulnerability called a buffer overflow (this is a high severity case), but we will not discuss this yet (sorry!). I will explain buffers and then buffer overflows in future tutorials.

Want to know more? There are more details and some examples found on OWASP or on this Phrack article.

Okay, back to programmer mode.

Conclusion

Now we should know how numbers are stored in the form of bits and the differences between signed and unsigned data types. We also learned a bit about security which is super sweet and it's only the fourth tutorial! Until next time.

dtm.
