When I scan a website with Vega, the website just goes offline. I think this is because it uses a honeypot, maybe set up through robots.txt.

Is there any way to scan it?

Thanks in advance.

Forum Thread:

How to Track Who Is Sms Bombing Me .

4

Replies

Forum Thread:

How to Track Who Is Sms Bombing Me .

4

Replies Forum Thread:

Removing Pay-as-You-Go Meter on Loan Phones.

1

Replies

Forum Thread:

Removing Pay-as-You-Go Meter on Loan Phones.

1

Replies Forum Thread:

Hydra Syntax Issue Stops After 16 Attempts

3

Replies

Forum Thread:

Hydra Syntax Issue Stops After 16 Attempts

3

Replies Forum Thread:

moab5.Sh Error While Running Metasploit

17

Replies

Forum Thread:

moab5.Sh Error While Running Metasploit

17

Replies Forum Thread:

Execute Reverse PHP Shell with Metasploit

1

Replies

Forum Thread:

Execute Reverse PHP Shell with Metasploit

1

Replies Forum Thread:

Install Metasploit Framework in Termux No Root Needed M-Wiz Tool

1

Replies

Forum Thread:

Install Metasploit Framework in Termux No Root Needed M-Wiz Tool

1

Replies Forum Thread:

Hack and Track People's Device Constantly Using TRAPE

35

Replies

Forum Thread:

Hack and Track People's Device Constantly Using TRAPE

35

Replies Forum Thread:

When My Kali Linux Finishes Installing (It Is Ready to Boot), and When I Try to Boot It All I Get Is a Black Screen.

8

Replies

Forum Thread:

When My Kali Linux Finishes Installing (It Is Ready to Boot), and When I Try to Boot It All I Get Is a Black Screen.

8

Replies Forum Thread:

HACK ANDROID with KALI USING PORT FORWARDING(portmap.io)

12

Replies

Forum Thread:

HACK ANDROID with KALI USING PORT FORWARDING(portmap.io)

12

Replies Forum Thread:

Hack Instagram Account Using BruteForce

208

Replies

Forum Thread:

Hack Instagram Account Using BruteForce

208

Replies Forum Thread:

Metasploit reverse_tcp Handler Problem

47

Replies

Forum Thread:

Metasploit reverse_tcp Handler Problem

47

Replies Forum Thread:

How to Train to Be an IT Security Professional (Ethical Hacker)

22

Replies

Forum Thread:

How to Train to Be an IT Security Professional (Ethical Hacker)

22

Replies Metasploit Error:

Handler Failed to Bind

41

Replies

Metasploit Error:

Handler Failed to Bind

41

Replies Forum Thread:

How to Hack Android Phone Using Same Wifi

21

Replies

Forum Thread:

How to Hack Android Phone Using Same Wifi

21

Replies How to:

HACK Android Device with TermuX on Android | Part #1 - Over the Internet [Ultimate Guide]

177

Replies

How to:

HACK Android Device with TermuX on Android | Part #1 - Over the Internet [Ultimate Guide]

177

Replies How to:

Crack Instagram Passwords Using Instainsane

36

Replies

How to:

Crack Instagram Passwords Using Instainsane

36

Replies Forum Thread:

How to Hack an Android Device Remotely, to Gain Acces to Gmail, Facebook, Twitter and More

5

Replies

Forum Thread:

How to Hack an Android Device Remotely, to Gain Acces to Gmail, Facebook, Twitter and More

5

Replies Forum Thread:

How Many Hackers Have Played Watch_Dogs Game Before?

13

Replies

Forum Thread:

How Many Hackers Have Played Watch_Dogs Game Before?

13

Replies Forum Thread:

How to Hack an Android Device with Only a Ip Adress

55

Replies

Forum Thread:

How to Hack an Android Device with Only a Ip Adress

55

Replies How to:

Sign the APK File with Embedded Payload (The Ultimate Guide)

10

Replies

How to:

Sign the APK File with Embedded Payload (The Ultimate Guide)

10

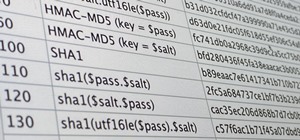

Replies Hack Like a Pro:

How to Crack Passwords, Part 3 (Using Hashcat)

Hack Like a Pro:

How to Crack Passwords, Part 3 (Using Hashcat)

How To:

Use Burp & FoxyProxy to Easily Switch Between Proxy Settings

How To:

Use Burp & FoxyProxy to Easily Switch Between Proxy Settings

How To:

Crack Password-Protected Microsoft Office Files, Including Word Docs & Excel Spreadsheets

How To:

Crack Password-Protected Microsoft Office Files, Including Word Docs & Excel Spreadsheets

Hack Like a Pro:

How to Crack User Passwords in a Linux System

Hack Like a Pro:

How to Crack User Passwords in a Linux System

How To:

Scan for Vulnerabilities on Any Website Using Nikto

How To:

Scan for Vulnerabilities on Any Website Using Nikto

How To:

Find Identifying Information from a Phone Number Using OSINT Tools

How To:

Find Identifying Information from a Phone Number Using OSINT Tools

How To:

Build a Beginner Hacking Kit with the Raspberry Pi 3 Model B+

How To:

Build a Beginner Hacking Kit with the Raspberry Pi 3 Model B+

How To:

Use Hash-Identifier to Determine Hash Types for Password Cracking

How To:

Use Hash-Identifier to Determine Hash Types for Password Cracking

How To:

Spy on Your "Buddy's" Network Traffic: An Intro to Wireshark and the OSI Model

How To:

Spy on Your "Buddy's" Network Traffic: An Intro to Wireshark and the OSI Model

How To:

Use Leaked Password Databases to Create Brute-Force Wordlists

How To:

Use Leaked Password Databases to Create Brute-Force Wordlists

How To:

Perform Local Privilege Escalation Using a Linux Kernel Exploit

How To:

Perform Local Privilege Escalation Using a Linux Kernel Exploit

Hack Like a Pro:

Finding Potential SUID/SGID Vulnerabilities on Linux & Unix Systems

Hack Like a Pro:

Finding Potential SUID/SGID Vulnerabilities on Linux & Unix Systems

Locking Down Linux:

Harden Sudo Passwords to Defend Against Hashcat Attacks

Locking Down Linux:

Harden Sudo Passwords to Defend Against Hashcat Attacks

How To:

Find Vulnerable Webcams Across the Globe Using Shodan

How To:

Find Vulnerable Webcams Across the Globe Using Shodan

How To:

Top 10 Things to Do After Installing Kali Linux

How To:

Top 10 Things to Do After Installing Kali Linux

How To:

Use Kismet to Watch Wi-Fi User Activity Through Walls

How To:

Use Kismet to Watch Wi-Fi User Activity Through Walls

How To:

Extract Bitcoin Wallet Addresses & Balances from Websites with SpiderFoot CLI

How To:

Extract Bitcoin Wallet Addresses & Balances from Websites with SpiderFoot CLI

How To:

Use Metasploit's WMAP Module to Scan Web Applications for Common Vulnerabilities

How To:

Use Metasploit's WMAP Module to Scan Web Applications for Common Vulnerabilities

How To:

Dox Anyone

How To:

Dox Anyone

How To:

Intercept Images from a Security Camera Using Wireshark

How To:

Intercept Images from a Security Camera Using Wireshark

7 Responses

What do you mean by "the website just go offline"? Do you mean you just cannot reach it anymore?

If it were a honeypot, it wouldn't necessarily be blocking you; it would be tracking you (kind of).

The robots.txt file doesn't block you either. It simply tells legitimate web crawlers not to crawl certain pages or resources.
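To show what "tells crawlers" means in practice, here is a minimal sketch using Python's standard `urllib.robotparser`. The rules, the bot name `ExampleBot` and the host `example.test` are made up for illustration. The parser only answers whether a well-behaved crawler should fetch a URL; nothing in it stops a client that ignores the answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, not taken from any real site.
rules = """\
User-agent: *
Disallow: /admin/
""".splitlines()

parser = RobotFileParser()
parser.parse(rules)

# A polite crawler asks before fetching; the file itself enforces nothing.
print(parser.can_fetch("ExampleBot", "http://example.test/admin/login"))  # False
print(parser.can_fetch("ExampleBot", "http://example.test/index.html"))   # True
```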

More than likely the site is deploying some sort of defense against automated scans, such as a WAF (Web Application Firewall). Automated scanners tend to be loud, fast and aggressive. This makes it easy for defense mechanisms to tell the difference between real user traffic and automated scans.
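As a rough illustration of why fast traffic stands out, here is a toy sliding-window counter in Python. It is only a sketch of the general idea, not how any particular WAF works; the class name `RateDetector` and the thresholds are assumptions chosen for the example.

```python
from collections import deque


class RateDetector:
    """Flag a client that sends too many requests inside a time window."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.seen = {}  # client id -> deque of recent request timestamps

    def is_suspicious(self, client, timestamp):
        times = self.seen.setdefault(client, deque())
        times.append(timestamp)
        # Forget requests that have fallen out of the window.
        while times and timestamp - times[0] > self.window_seconds:
            times.popleft()
        return len(times) > self.max_requests


detector = RateDetector(max_requests=5, window_seconds=1.0)

# A scanner firing a request every 10 ms is flagged quickly.
scanner_flagged = any(
    detector.is_suspicious("scanner", i * 0.01) for i in range(20)
)
# A person clicking every 2 seconds never crosses the threshold.
human_flagged = any(
    detector.is_suspicious("human", i * 2.0) for i in range(20)
)
print(scanner_flagged, human_flagged)
```

Real products usually look at more than request rate (headers, payload patterns, reputation lists), so treat this as the simplest possible version of the idea.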

Then how can I scan that website?

Well, you should know that some websites go offline because the scan itself can cause a DoS on the site as a side effect.

Does it come back online shortly after the scan stops?

And yes, a single box scanning a site can DoS it.

What do you mean by "the website just go offline"? Do you mean you just cannot reach it anymore, or does the site actually go down?

More information would be needed to try to help you. Depending on the security measures in use, you may have to adjust the speed and aggressiveness of the scan. You could always test the site manually.

Try changing the user agent.

Also lower the speed of the scan, and try a stealth scan with nmap.
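The two suggestions above (a different user agent and a slower pace) can be sketched in plain Python with the standard library. This is not Vega or nmap configuration; the user agent string, the host `example.test` and the helper names are hypothetical, and the snippet only builds and prints the requests rather than sending them.

```python
import time
import urllib.request

# Hypothetical user agent string, for illustration only.
USER_AGENT = "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0"


def build_request(url, user_agent=USER_AGENT):
    """Create a request object that carries a custom User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def paced(items, delay_seconds, sleep=time.sleep):
    """Yield items one at a time, pausing between consecutive items."""
    for index, item in enumerate(items):
        if index > 0:
            sleep(delay_seconds)
        yield item


if __name__ == "__main__":
    paths = ["/", "/about", "/contact"]
    for path in paced(paths, delay_seconds=0.2):
        request = build_request("http://example.test" + path)
        # urllib stores header names capitalized as "User-agent".
        print(request.full_url, request.get_header("User-agent"))
```

The delay is passed in as a parameter so it can be tuned to whatever the target tolerates; a longer pause per request is the simplest way to make a scan less likely to knock a small site over.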
