When I scan a website with Vega, the website just goes offline. I think it is because of a honeypot, maybe in the robots.txt.

Is there any way to scan it?

Thanks in advance.

Forum Thread:

When My Kali Linux Finishes Installing (It Is Ready to Boot), and When I Try to Boot It All I Get Is a Black Screen.

8

Replies

Forum Thread:

When My Kali Linux Finishes Installing (It Is Ready to Boot), and When I Try to Boot It All I Get Is a Black Screen.

8

Replies Forum Thread:

HACK ANDROID with KALI USING PORT FORWARDING(portmap.io)

12

Replies

Forum Thread:

HACK ANDROID with KALI USING PORT FORWARDING(portmap.io)

12

Replies Forum Thread:

Hydra Syntax Issue Stops After 16 Attempts

2

Replies

Forum Thread:

Hydra Syntax Issue Stops After 16 Attempts

2

Replies Forum Thread:

Hack Instagram Account Using BruteForce

208

Replies

Forum Thread:

Hack Instagram Account Using BruteForce

208

Replies Forum Thread:

Metasploit reverse_tcp Handler Problem

47

Replies

Forum Thread:

Metasploit reverse_tcp Handler Problem

47

Replies Forum Thread:

How to Train to Be an IT Security Professional (Ethical Hacker)

22

Replies

Forum Thread:

How to Train to Be an IT Security Professional (Ethical Hacker)

22

Replies Metasploit Error:

Handler Failed to Bind

41

Replies

Metasploit Error:

Handler Failed to Bind

41

Replies Forum Thread:

How to Hack Android Phone Using Same Wifi

21

Replies

Forum Thread:

How to Hack Android Phone Using Same Wifi

21

Replies How to:

HACK Android Device with TermuX on Android | Part #1 - Over the Internet [Ultimate Guide]

177

Replies

How to:

HACK Android Device with TermuX on Android | Part #1 - Over the Internet [Ultimate Guide]

177

Replies How to:

Crack Instagram Passwords Using Instainsane

36

Replies

How to:

Crack Instagram Passwords Using Instainsane

36

Replies Forum Thread:

How to Hack an Android Device Remotely, to Gain Acces to Gmail, Facebook, Twitter and More

5

Replies

Forum Thread:

How to Hack an Android Device Remotely, to Gain Acces to Gmail, Facebook, Twitter and More

5

Replies Forum Thread:

How Many Hackers Have Played Watch_Dogs Game Before?

13

Replies

Forum Thread:

How Many Hackers Have Played Watch_Dogs Game Before?

13

Replies Forum Thread:

How to Hack an Android Device with Only a Ip Adress

55

Replies

Forum Thread:

How to Hack an Android Device with Only a Ip Adress

55

Replies How to:

Sign the APK File with Embedded Payload (The Ultimate Guide)

10

Replies

How to:

Sign the APK File with Embedded Payload (The Ultimate Guide)

10

Replies Forum Thread:

How to Run and Install Kali Linux on a Chromebook

18

Replies

Forum Thread:

How to Run and Install Kali Linux on a Chromebook

18

Replies Forum Thread:

How to Find Admin Panel Page of a Website?

13

Replies

Forum Thread:

How to Find Admin Panel Page of a Website?

13

Replies Forum Thread:

can i run kali lenux in windows 10 without reboting my computer

4

Replies

Forum Thread:

can i run kali lenux in windows 10 without reboting my computer

4

Replies Forum Thread:

How to Hack School Website

11

Replies

Forum Thread:

How to Hack School Website

11

Replies Forum Thread:

Make a Phishing Page for Harvesting Credentials Yourself

8

Replies

Forum Thread:

Make a Phishing Page for Harvesting Credentials Yourself

8

Replies Forum Thread:

Creating an Completely Undetectable Executable in Under 15 Minutes!

38

Replies

Forum Thread:

Creating an Completely Undetectable Executable in Under 15 Minutes!

38

Replies How To:

Use Burp & FoxyProxy to Easily Switch Between Proxy Settings

How To:

Use Burp & FoxyProxy to Easily Switch Between Proxy Settings

How To:

Scan for Vulnerabilities on Any Website Using Nikto

How To:

Scan for Vulnerabilities on Any Website Using Nikto

How to Hack Wi-Fi:

Cracking WPA2 Passwords Using the New PMKID Hashcat Attack

How to Hack Wi-Fi:

Cracking WPA2 Passwords Using the New PMKID Hashcat Attack

How To:

Get Root with Metasploit's Local Exploit Suggester

How To:

Get Root with Metasploit's Local Exploit Suggester

How To:

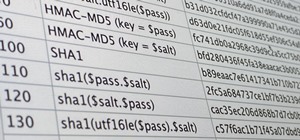

Use Hash-Identifier to Determine Hash Types for Password Cracking

How To:

Use Hash-Identifier to Determine Hash Types for Password Cracking

How To:

Generate Crackable Wi-Fi Handshakes with an ESP8266-Based Test Network

How To:

Generate Crackable Wi-Fi Handshakes with an ESP8266-Based Test Network

How To:

Crack SSH Private Key Passwords with John the Ripper

How To:

Crack SSH Private Key Passwords with John the Ripper

How To:

Crack Shadow Hashes After Getting Root on a Linux System

How To:

Crack Shadow Hashes After Getting Root on a Linux System

How To:

Find Identifying Information from a Phone Number Using OSINT Tools

How To:

Find Identifying Information from a Phone Number Using OSINT Tools

Tutorial:

Create Wordlists with Crunch

Tutorial:

Create Wordlists with Crunch

Hacking Windows 10:

How to Dump NTLM Hashes & Crack Windows Passwords

Hacking Windows 10:

How to Dump NTLM Hashes & Crack Windows Passwords

How To:

Scrape Target Email Addresses with TheHarvester

How To:

Scrape Target Email Addresses with TheHarvester

Steganography:

How to Hide Secret Data Inside an Image or Audio File in Seconds

Steganography:

How to Hide Secret Data Inside an Image or Audio File in Seconds

How To:

Use Metasploit's WMAP Module to Scan Web Applications for Common Vulnerabilities

How To:

Use Metasploit's WMAP Module to Scan Web Applications for Common Vulnerabilities

Android for Hackers:

How to Turn an Android Phone into a Hacking Device Without Root

Android for Hackers:

How to Turn an Android Phone into a Hacking Device Without Root

How to Use PowerShell Empire:

Generating Stagers for Post Exploitation of Windows Hosts

How to Use PowerShell Empire:

Generating Stagers for Post Exploitation of Windows Hosts

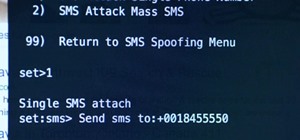

The Hacks of Mr. Robot:

How to Send a Spoofed SMS Text Message

The Hacks of Mr. Robot:

How to Send a Spoofed SMS Text Message

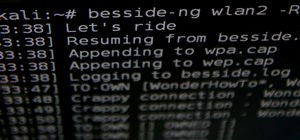

How to Hack Wi-Fi:

Automating Wi-Fi Hacking with Besside-ng

How to Hack Wi-Fi:

Automating Wi-Fi Hacking with Besside-ng

How To:

Load Kali Linux on the Raspberry Pi 4 for the Ultimate Miniature Hacking Station

How To:

Load Kali Linux on the Raspberry Pi 4 for the Ultimate Miniature Hacking Station

How To:

Fingerprint Web Apps & Servers for Better Recon & More Successful Hacks

How To:

Fingerprint Web Apps & Servers for Better Recon & More Successful Hacks

7 Responses

What do you mean by "the website just goes offline"? Like, you just cannot reach it anymore?

If it were a honeypot, it wouldn't necessarily block you; it would be tracking you (kind of).

The robots.txt file doesn't block you either. It simply tells legitimate web crawlers not to crawl certain pages or resources.
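To illustrate that robots.txt is only advisory, here is a minimal Python sketch using the standard library's `urllib.robotparser`. The robots.txt content and the crawler name `MyCrawler` are made up for the example. `can_fetch()` only reports what the file asks a polite crawler to do; nothing in it stops a client from requesting a disallowed path.

```python
from urllib import robotparser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /private/
"""


def build_parser(text: str) -> robotparser.RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    rp = robotparser.RobotFileParser()
    rp.parse(text.splitlines())
    return rp


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    # can_fetch() only says what the file *requests*; it enforces nothing.
    for path in ("/", "/admin/login", "/private/data", "/blog/post"):
        print(path, rp.can_fetch("MyCrawler", "https://example.com" + path))
```

A crawler that honors the file checks `can_fetch()` before each request; a scanner that ignores it is not blocked by the file itself.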

More than likely, the site is deploying some sort of defense against automated scans, such as a WAF (Web Application Firewall). Automated scanners tend to be loud, fast, and aggressive, which makes it easy for defense mechanisms to tell real user traffic apart from automated scans.
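As a rough illustration of how request volume alone can separate a scanner from a person, here is a hypothetical sliding-window detector in Python. The class name, the 20-requests-per-second threshold, and the client addresses are assumptions for the example; real WAFs combine many more signals (signatures, headers, reputation), and this is not how any particular product works.

```python
from collections import defaultdict, deque


class SlidingWindowDetector:
    """Flag a client that sends more than `max_requests` within `window_s` seconds.

    A toy model of one signal a WAF *might* use (hypothetical helper).
    """

    def __init__(self, max_requests: int = 20, window_s: float = 1.0) -> None:
        self.max_requests = max_requests
        self.window_s = window_s
        self._history = defaultdict(deque)  # client -> recent request timestamps

    def observe(self, client: str, timestamp: float) -> bool:
        """Record one request; return True if the client now exceeds the limit."""
        times = self._history[client]
        times.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while times and timestamp - times[0] > self.window_s:
            times.popleft()
        return len(times) > self.max_requests


if __name__ == "__main__":
    det = SlidingWindowDetector(max_requests=20, window_s=1.0)
    # Scanner-like burst: 50 requests, 10 ms apart.
    scanner = [det.observe("10.0.0.5", i * 0.01) for i in range(50)]
    # Human-like browsing: 5 requests, 2 s apart.
    human = [det.observe("10.0.0.9", i * 2.0) for i in range(5)]
    print("scanner flagged:", any(scanner))
    print("human flagged:", any(human))
```

The burst trips the limit on its 21st request, while the slow client never has more than one request in the window.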

Then how can I scan that website?

Well, you should know that some websites go offline because the scan can cause a DoS on the site as a side effect.

Does it come back online shortly after the scan stops?

And yes, a single box scanning a site can DoS it.

What do you mean by "the website just goes offline"? Like, you just cannot reach it anymore? Or does the site actually go down?

More information is needed to help you. Depending on the security measures in use, you may have to adjust the speed and aggressiveness of the scan. You could always scan the site manually.
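Slowing a scan down mostly means spacing requests out. Here is a minimal Python sketch, assuming a hypothetical `Pacer` helper that enforces a minimum gap between consecutive requests. The clock and sleep functions are injectable so the behavior can be checked without actually waiting. Dedicated scanners usually expose their own rate or thread settings; this only shows the idea.

```python
import time
from typing import Callable, Optional


class Pacer:
    """Enforce a minimum interval between consecutive requests (hypothetical helper)."""

    def __init__(
        self,
        min_interval_s: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_interval_s = min_interval_s
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None

    def wait(self) -> float:
        """Block until the next request is allowed; return the seconds slept."""
        now = self._clock()
        slept = 0.0
        if self._last is not None:
            remaining = self.min_interval_s - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                slept = remaining
                now = self._clock()
        self._last = now
        return slept


if __name__ == "__main__":
    pacer = Pacer(min_interval_s=0.5)
    for path in ("/", "/about", "/contact"):  # hypothetical paths
        pacer.wait()
        print("would request", path)  # placeholder for the actual request
```

Only the gap since the previous request matters, so time spent processing a response counts toward the interval instead of being added on top of it.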

Try changing the user agent and the speed of the scan. Also try a stealth scan with nmap.
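Changing the user agent just means sending a different `User-Agent` header. Here is a minimal Python sketch using the standard library. Without a custom header, `urllib` identifies itself as `Python-urllib/3.x`, which is easy to flag. The Firefox-style string below is only an example value, and the request is built but not sent.

```python
import urllib.request

# Example browser-style UA string (an assumption; any value works for the demo).
BROWSER_UA = "Mozilla/5.0 (X11; Linux x86_64; rv:115.0) Gecko/20100101 Firefox/115.0"


def build_request(url: str, user_agent: str = BROWSER_UA) -> urllib.request.Request:
    """Build (but do not send) a GET request carrying a custom User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


if __name__ == "__main__":
    req = build_request("https://example.com/")
    # urllib normalizes header names with str.capitalize(), hence "User-agent".
    print(req.get_method(), req.full_url, req.get_header("User-agent"))
    # Sending it would be: urllib.request.urlopen(req, timeout=10)
```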
